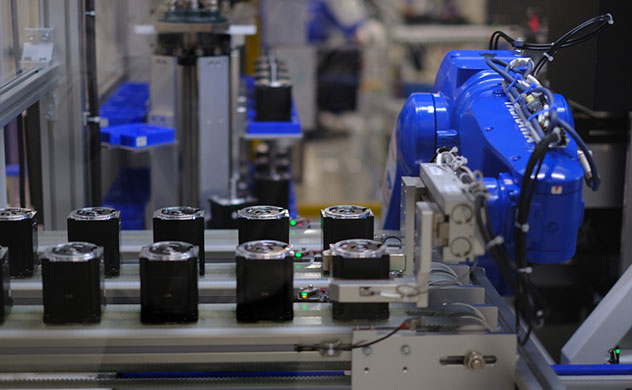

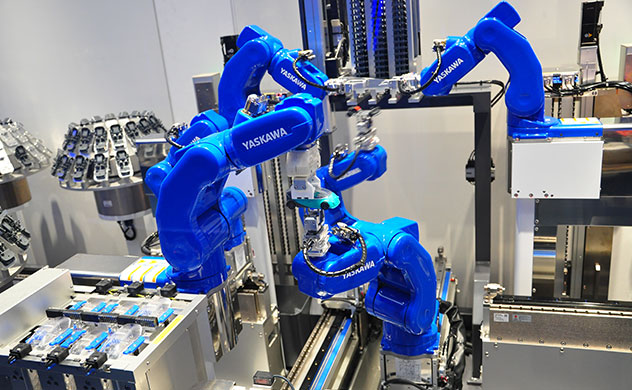

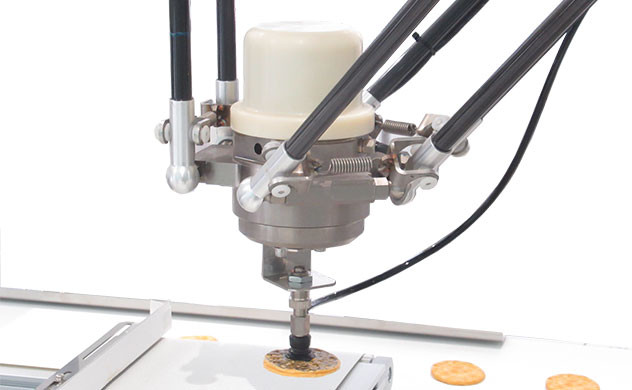

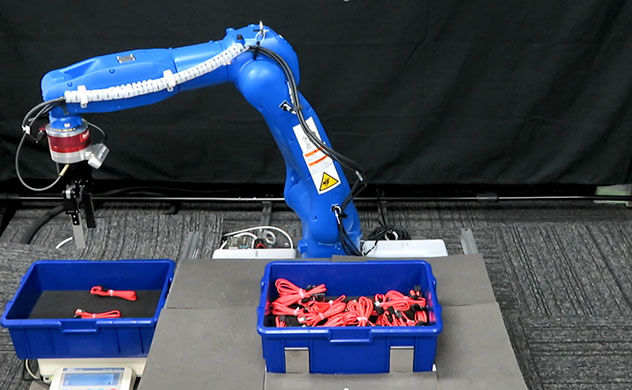

To date, industrial robots equipped with vision systems have been put into practical use for bulk picking of rigid objects such as mechanical parts, because the picking patterns for such objects can be standardized. Soft or irregularly shaped objects such as cables, however, differ from piece to piece, so automating their picking has been difficult and the work has had to rely on manual labor.

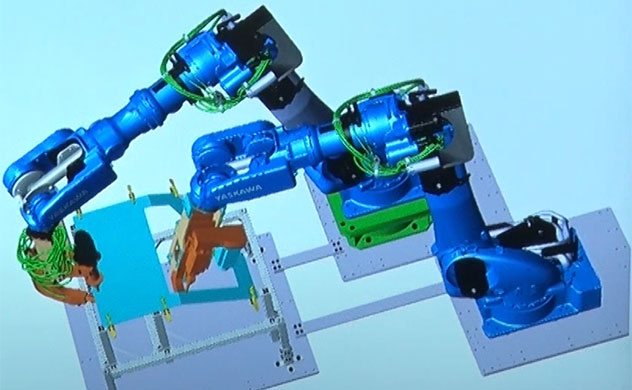

By using “Alliom”, an AI technology developed by Yaskawa, we can generate learning data on a simulator that closely matches the real environment, and pick not only rigid objects but also soft objects with the same hand. Because all three steps of AI generation, namely (1) generating learning data, (2) training, and (3) producing the AI model, run on the simulator, the lead time from installation to actual operation, including AI development, is greatly shortened, and accuracy in actual operation can also be improved.

For example, for bulk picking of parts, the target parts are imported into the simulator, and AI builds a virtual work environment that is as close as possible to the real one, reproducing properties such as the friction of the parts and the angle of the light source. By using AI to generate large amounts of part data and many different virtual stacking arrangements, the robot hand learns which approach paths and grasp points give a stable hold, and this process is repeated to improve accuracy.
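The simulate-and-learn loop described above resembles the general technique known as domain randomization. The sketch below illustrates that idea only and is not Alliom's implementation: the `Scene` fields, their parameter ranges, and the toy `simulate_grasp` success model are hypothetical stand-ins for a real physics simulator, and "learning" is reduced to choosing the candidate grasp offset with the highest success rate across many randomized scenes.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    """One randomized virtual bin (all fields are hypothetical)."""
    friction: float         # contact friction coefficient of the parts
    light_angle_deg: float  # light-source angle (would affect the rendered image)
    num_parts: int          # how many parts are piled in the bin


def sample_scene(rng: random.Random) -> Scene:
    # Domain randomization: draw each physical/visual parameter from a range
    return Scene(
        friction=rng.uniform(0.2, 0.9),
        light_angle_deg=rng.uniform(0.0, 90.0),
        num_parts=rng.randint(5, 30),
    )


def simulate_grasp(scene: Scene, grasp_offset: float, rng: random.Random) -> bool:
    """Toy stand-in for a physics simulator (assumption): success is likelier
    when the grasp point is near the part's center (offset 0), when friction
    is high, and when the bin is less crowded."""
    p = scene.friction * (1.0 - abs(grasp_offset)) * (1.0 - scene.num_parts / 100.0)
    return rng.random() < p


def learn_best_grasp(candidates: list[float], n_scenes: int, seed: int = 0) -> float:
    """Try every candidate grasp offset in many randomized scenes and
    return the one with the most successful simulated grasps."""
    rng = random.Random(seed)
    successes = {c: 0 for c in candidates}
    for _ in range(n_scenes):
        scene = sample_scene(rng)
        for c in candidates:
            if simulate_grasp(scene, c, rng):
                successes[c] += 1
    return max(candidates, key=lambda c: successes[c])


best = learn_best_grasp([-0.8, -0.4, 0.0, 0.4, 0.8], n_scenes=2000)
print(best)
```

In a real system the toy success model would be replaced by a physics engine and a learned grasp-prediction model; the randomized parameters are what expose training to the variety found in a real bin.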

This eliminates the time and cost of generating learning data on actual machines and enables verification and deployment within 3–4 hours. As a result, the same hand can pick everything from hard parts such as metal components to irregular objects such as cables.

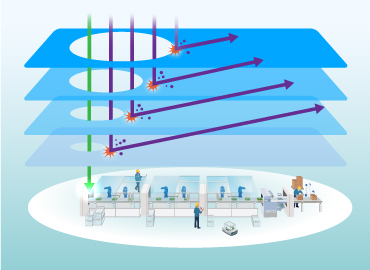

In addition, in processes such as visual inspection of metal castings, where defects occur so rarely that collecting enough data takes a long time, simulation can generate realistic defect data from only a few dozen photos, producing an AI model that can be put into practice. We will expand the application of this technology, including scratch inspection processes that link robots with vision cameras.
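To illustrate how a few dozen photos might be expanded into a much larger labeled defect dataset, here is a minimal sketch that paints randomized synthetic scratches onto clean grayscale images and records a pixel mask for each. It is an illustration under stated assumptions, not Yaskawa's method: images are plain nested lists of 0–255 values, and the scratch model (a straight darkened line with random position, angle, length, and depth) and the function names are hypothetical.

```python
from __future__ import annotations

import math
import random


def add_synthetic_scratch(img: list[list[int]], rng: random.Random):
    """Return a copy of a grayscale image with one darkened straight-line
    'scratch' (hypothetical defect model) and a 0/1 mask of scratched pixels."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]            # never modify the original photo
    mask = [[0] * w for _ in range(h)]
    x0, y0 = rng.uniform(0, w - 1), rng.uniform(0, h - 1)  # random start point
    angle = rng.uniform(0.0, math.pi)                        # random direction
    length = rng.uniform(0.2, 0.6) * min(h, w)               # random length
    depth = rng.randint(40, 120)                             # how much darker
    steps = max(1, int(length))
    for i in range(steps + 1):
        t = length * i / steps
        x = int(round(x0 + t * math.cos(angle)))
        y = int(round(y0 + t * math.sin(angle)))
        # Darken each in-bounds pixel on the line once
        if 0 <= x < w and 0 <= y < h and not mask[y][x]:
            out[y][x] = max(0, out[y][x] - depth)
            mask[y][x] = 1
    return out, mask


def generate_defect_dataset(clean_photos, n_samples: int, seed: int = 0):
    """Expand a small set of clean photos into n_samples (image, mask) pairs."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_samples):
        base = rng.choice(clean_photos)
        dataset.append(add_synthetic_scratch(base, rng))
    return dataset


photos = [[[200] * 32 for _ in range(32)] for _ in range(3)]
data = generate_defect_dataset(photos, n_samples=50, seed=7)
print(len(data))
```

A production pipeline would likely use rendering or a generative model to make defects look realistic; the sketch only shows why synthetic data is attractive for rare defects: every generated image comes with an exact label at no extra cost.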
